Introduction: The Shift from Prediction to Action

Artificial intelligence is undergoing a quiet but profound shift. For the past decade, most enterprise AI systems have been designed to predict: classify a document, rank a lead, recommend an offer, generate a response. These systems operate within well-defined boundaries. They take an input, compute a prediction, and return an output.

Agentic AI is different. Instead of simply predicting, agentic systems are built to act. They interpret goals, design their own sequence of steps, decide which tools or systems to call, gather information as they go, and adapt their behavior based on what they observe. Rather than being embedded at a single point in a workflow, they move through the workflow itself.

This distinction matters. Traditional automation, including robotic process automation (RPA), follows predefined instructions exactly: given the same input, it takes the same steps every time. Agentic AI, by contrast, is probabilistic. Given the same goal and similar inputs, an agent may choose different paths depending on context, data availability, or its own confidence. That flexibility allows it to handle variety and incomplete information, but it also introduces new forms of uncertainty.

As enterprises rush to explore “AI agents,” it is tempting to treat them as simply smarter automation. In practice, the difference is fundamental. Automation knows what to do. Agents figure out what to do next. The organizations that recognize this distinction early will be better positioned to manage both the upside and the risk of this new class of systems.

What Is Agentic AI in Practice?

1 From Scripts to Goal-Seeking Systems

Agentic AI systems start from a goal rather than a script. When asked to resolve a support ticket, triage an insurance claim, or prepare a compliance dossier, an agent does more than generate a single response. It breaks the goal into tasks, plans a sequence of actions, interacts with tools and applications, and adjusts its plan as new information emerges.

Under the hood, a typical agent spans several layers:

- a foundation model providing reasoning, language understanding, and generative capabilities;

- a planning layer that decomposes tasks and adapts when outcomes diverge from expectations;

- a tool and action layer that calls APIs, databases, browsers, and enterprise systems;

- a memory layer that holds context and integrates enterprise knowledge;

- an observation layer that inspects tool outputs and detects unexpected states;

- a safety layer that enforces constraints and permissions; and

- an orchestration layer that coordinates agents and embeds them into existing workflows.

When these layers work in harmony, agents can behave like semi-autonomous digital operators inside the enterprise. When they do not, reliability issues become visible quickly.
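To make the interplay between these layers more concrete, here is a deliberately small plan-act-observe loop. It is an illustrative sketch, not a reference architecture: the tool names, the goal-to-plan mapping, and the `ALLOWED_TOOLS` set are hypothetical, and the planner is a stub where a real system would call a foundation model and re-plan when outcomes diverge.

```python
from dataclasses import dataclass, field
from typing import Callable

# Tool and action layer: hypothetical tools standing in for real enterprise APIs.
def lookup_account(customer_id: str) -> dict:
    return {"customer_id": customer_id, "status": "active"}

def draft_reply(customer_id: str) -> dict:
    return {"draft": f"Hello {customer_id}, your account is active."}

def issue_refund(customer_id: str) -> dict:
    return {"refunded": True}

TOOLS: dict[str, Callable[[str], dict]] = {
    "lookup_account": lookup_account,
    "draft_reply": draft_reply,
    "issue_refund": issue_refund,
}

# Safety layer: the only tools the agent may call without a human.
ALLOWED_TOOLS = {"lookup_account", "draft_reply"}

@dataclass
class Memory:
    """Memory layer: records every step and its result."""
    steps: list[tuple[str, dict]] = field(default_factory=list)

def plan(goal: str) -> list[str]:
    """Planning layer stub: a real agent would ask a model to decompose the goal."""
    if "refund" in goal:
        return ["lookup_account", "issue_refund"]
    return ["lookup_account", "draft_reply"]

def observe(result: dict) -> bool:
    """Observation layer: reject empty or error-bearing tool outputs."""
    return bool(result) and "error" not in result

def run_agent(goal: str, customer_id: str) -> tuple[str, Memory]:
    memory = Memory()
    for tool_name in plan(goal):
        if tool_name not in ALLOWED_TOOLS:
            # Hand control back to a human instead of acting.
            return f"escalated: {tool_name} requires human approval", memory
        result = TOOLS[tool_name](customer_id)
        memory.steps.append((tool_name, result))
        if not observe(result):
            return f"escalated: unexpected output from {tool_name}", memory
    return "completed", memory
```

Calling `run_agent("answer account question", "C-42")` completes both steps, while `run_agent("process refund", "C-42")` stops at the refund step and escalates. The point of the sketch is that each layer is a separate, inspectable piece, so a failure can be traced to planning, tooling, observation, or policy.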

2 Where Agents Are Already Creating Value

Although the concept feels new, agentic patterns are already emerging across industries.

In customer support, agents can retrieve account histories, search knowledge bases, interpret customer questions, and draft tailored responses. Human support staff then review and send the final answer, especially in ambiguous or sensitive cases. In insurance, agents can read lengthy claim submissions, extract key details, check them against policy terms, identify missing documentation, and assemble case summaries for adjudicators, substantially reducing manual preparation time.

Financial institutions are beginning to rely on agents to pre-process compliance workflows. An agent can perform the first pass of know-your-customer (KYC) verification, flag inconsistencies in documents, cross-reference sanctions lists, and compile evidence for analysts to evaluate. In research-intensive roles, agents help teams scan large volumes of reports, extract insights, and synthesize findings, freeing experts to focus on interpretation and decision-making rather than manual reading.

Developer and DevOps teams are also experimenting with agents that can refactor code, run tests, analyze error logs, and suggest fixes. Similarly, in operations and back-office environments, agents can triage email inboxes, extract tasks, update CRMs, adjust schedules, and generate periodic summaries.

Across all these examples, a common pattern emerges: agents handle the structured, repeatable, and context-heavy parts of an activity, while humans retain responsibility for judgment, edge cases, and exceptions.

Agentic AI on the Gartner Hype Cycle

1 Where We Are Today

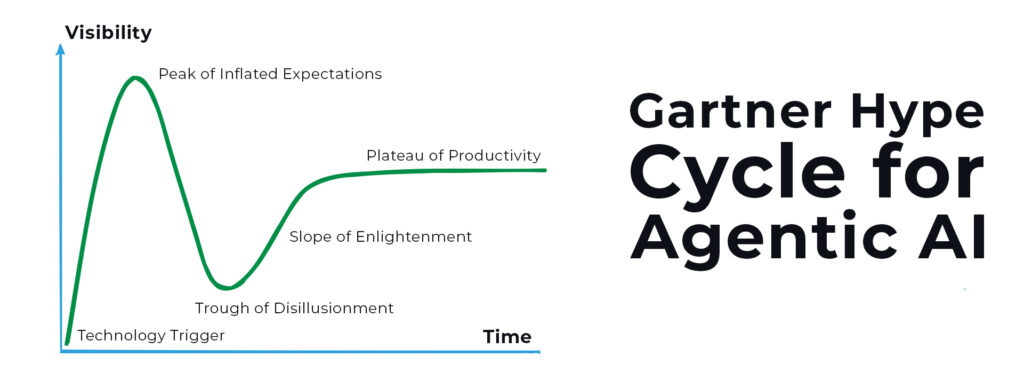

Agentic AI has moved rapidly from research prototypes into the center of enterprise AI roadmaps. Over the past few years, tool-enabled large language models, planning frameworks, memory systems, and autonomous workflow agents have entered the mainstream developer ecosystem. This period—roughly 2022 to 2023—served as the “innovation trigger,” when early adopters began experimenting with multi-step agents and sharing promising results.

Today, we are at or near the “peak of inflated expectations.” Many organizations talk about agents as “AI employees,” capable of taking on complex, multi-step workflows end to end. Demos can be impressive, and early pilots have produced meaningful productivity gains. At the same time, real-world deployments are revealing the limits of the technology. Agents can loop, stall, misinterpret ambiguous instructions, or fail to recover gracefully when tools behave unexpectedly.

This is not a sign that the technology is flawed. It is a normal stage in the Hype Cycle: expectations have leapt ahead of operational maturity.

2 What Comes Next

As more enterprises scale pilots, we should expect a phase of recalibration, a “trough of disillusionment” in which organizations realize that agents are neither drop-in replacements for humans nor perfect substitutes for deterministic automation. This phase is likely to be characterized by uneven reliability, confusion over what agents should and should not handle, and a growing understanding that governance, evaluation, and oversight are not optional.

From there, the market will begin its climb along the “slope of enlightenment.” Architectures will improve, best practices will emerge, and organizations will adopt clearer patterns for scoping agents, designing guardrails, managing memory, and defining oversight. Vendors and practitioners will develop more standardized evaluation methods and risk frameworks.

At the “plateau of productivity,” agents will not be the “AI employees” of science fiction. Instead, they will be stable, semi-autonomous digital operators embedded into carefully scoped workflows. They will handle structured, high-volume parts of a process, while human experts provide direction, make judgment calls, and monitor outcomes.

3 Hype vs. Reality

A balanced view of Agentic AI rests on four observations.

- First, the underlying capabilities are genuinely advancing. Agents can already plan multi-step workflows, interact with APIs and databases, maintain context across steps, and take meaningful actions within enterprise systems. This goes beyond what traditional automation or single-step model inference can offer.

- Second, reliability is uneven. Agents can produce different plans for similar tasks, misread ambiguous instructions, mishandle tool outputs, or persist incorrect assumptions across multiple steps. In regulated or high-stakes contexts, this variability is the central challenge.

- Third, fully autonomous AI workers are unlikely in the near term. Current architectures struggle with nuanced risk assessment, handling rare events, and interpreting conflicting signals in a way that meets the standards of regulated industries.

- Finally, semi-autonomous agents operating under clear constraints are viable today. When workflows are well bounded (drafting responses, preparing claims dossiers, pre-processing financial checks, extracting data from documents, generating research summaries), agents can deliver significant value safely.

The near-term future is not full autonomy; it is guided autonomy.

A Framework for Reliability: Eight Pillars of Agentic Governance

To move from promising demos to dependable systems, enterprises need a structured way to think about agent reliability and governance. A practical way to do this is through eight complementary pillars.

- The first pillar, performance verification, focuses on whether agents actually behave as intended. This includes assessing whether they can complete tasks end to end, how often they diverge or stall, and whether they respond consistently to similar inputs. For instance, a claims triage agent might perform well on standard cases yet struggle with multi-injury scenarios; systematic verification brings such weaknesses to light before they impact customers.

- Explainability, the second pillar, is about transparency. Enterprises need to understand why an agent chose a particular action or recommendation and whether the rationale aligns with internal policies. A compliance agent that can clearly indicate which sanctions lists triggered a flag and why gives analysts a concrete basis for validation rather than a black-box suggestion.

- The third pillar, synthetic data and scenario curation, helps stress-test agents in controlled but challenging situations. By exposing agents to rare events, ambiguous inputs, conflicting signals, or inconsistent tool outputs, organizations can observe how they behave under pressure and identify where additional guardrails or decision rules are needed.

- Bias and harm mitigation form the fourth pillar. Agents should not inadvertently favor particular demographics, writing styles, or linguistic patterns. For example, a loan pre-processing agent must not conflate the quality of a customer’s written communication with their creditworthiness. Here, both data and behavior need to be monitored continuously.

- The fifth pillar is safety and guardrails. This includes defining which actions an agent is allowed to take, what tools it may access, what thresholds trigger escalation, and where hard stops are enforced. A DevOps agent might be permitted to create and modify test infrastructure but never to deploy directly to production systems without explicit human approval.

- The sixth pillar addresses tooling and environment orchestration. Agents depend on external systems that change over time. APIs may evolve, response formats may shift, and temporary failures or slowdowns may occur. Reliable agents must be designed to detect and respond to these changes gracefully, pausing or escalating when conditions deviate from expectations rather than proceeding blindly.

- Knowledge and memory governance is the seventh pillar. Agents that rely on outdated documentation, misremember earlier steps, or persist hallucinated facts can introduce subtle and dangerous errors. Governance practices must ensure that retrieved content is current and trustworthy, that long-running workflows manage state correctly, and that “memory contamination” does not accumulate over time.

- Finally, human–agent collaboration is the eighth pillar. Reliable systems are explicit about which tasks agents handle autonomously, when they escalate to human reviewers, how uncertainty is modeled, and how human feedback is fed into system improvement. A support agent might handle routine warranty inquiries fully but escalate anything touching discretionary refunds or exceptions.

Together, these pillars form a practical foundation for deploying agentic systems in a way that is not only powerful but also predictable, auditable, and aligned with enterprise risk appetite.
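As one illustration of the first pillar, a verification harness can replay the same case many times and summarize how often the agent completes, stalls, or changes its plan. The sketch below is hypothetical throughout: `toy_claims_agent` simulates a probabilistic agent with made-up failure rates (including a weakness on multi-injury claims), and the three metrics are one reasonable choice rather than an established standard.

```python
import random
from collections import Counter
from dataclasses import dataclass
from typing import Callable

@dataclass
class RunResult:
    completed: bool
    stalled: bool
    plan: tuple[str, ...]

def toy_claims_agent(case: str, rng: random.Random) -> RunResult:
    """Hypothetical stand-in for one run of a probabilistic claims agent."""
    if rng.random() < 0.2:  # simulated stall in roughly 20% of runs
        return RunResult(completed=False, stalled=True, plan=("extract",))
    plan = ("extract", "check_policy", "summarize")
    if "multi-injury" in case and rng.random() < 0.5:
        plan = ("extract", "summarize")  # simulated weakness: skips the policy check
    return RunResult(completed=True, stalled=False, plan=plan)

def verify(agent: Callable[[str, random.Random], RunResult],
           case: str, runs: int = 50, seed: int = 0) -> dict:
    """Replay one case `runs` times with a fixed seed and summarize behavior."""
    rng = random.Random(seed)
    results = [agent(case, rng) for _ in range(runs)]
    plans = Counter(r.plan for r in results)
    _, modal_count = plans.most_common(1)[0]
    return {
        "completion_rate": sum(r.completed for r in results) / runs,
        "stall_rate": sum(r.stalled for r in results) / runs,
        # Share of runs that followed the most common plan.
        "plan_consistency": modal_count / runs,
    }
```

Comparing `verify(toy_claims_agent, "standard claim")` with `verify(toy_claims_agent, "multi-injury claim")` should show lower plan consistency on the harder case, which is the kind of weakness the pillar aims to surface before deployment. Fixing the seed keeps the report reproducible across runs of the harness itself.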

The Role of Human Oversight: How Autonomy Becomes Trustworthy

Perhaps the most important realization about Agentic AI is that human oversight is not a temporary crutch; it is a structural requirement. Agents operate through probabilistic reasoning, in environments full of ambiguity, incomplete information, and edge cases. Under those conditions, human judgment is not an optional extra; it complements the agent precisely where probabilistic reasoning is weakest.

Oversight appears at multiple stages of the agent lifecycle. During design, humans define what the agent is allowed to do, where it must not act, which tools it may access, and which policies constrain its behavior. They determine, for example, that an agent may draft refund recommendations but cannot issue refunds, or that an agent may propose changes to infrastructure but cannot apply them without review.
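Design-time decisions like these can be captured as an explicit, reviewable policy instead of being left implicit in prompts. A minimal sketch, assuming hypothetical action names drawn from the examples above and a deny-by-default rule for anything the policy does not list:

```python
from enum import Enum
from typing import Optional

class Mode(Enum):
    ALLOW = "allow"
    REQUIRE_APPROVAL = "require_approval"
    DENY = "deny"

# Hypothetical policy written and reviewed by humans before deployment.
POLICY: dict[str, Mode] = {
    "draft_refund_recommendation": Mode.ALLOW,
    "issue_refund": Mode.DENY,
    "propose_infra_change": Mode.ALLOW,
    "apply_infra_change": Mode.REQUIRE_APPROVAL,
}

def authorize(action: str, approved_by: Optional[str] = None) -> bool:
    """Return True if the agent may perform `action` right now."""
    mode = POLICY.get(action, Mode.DENY)  # unlisted actions are denied by default
    if mode is Mode.ALLOW:
        return True
    if mode is Mode.REQUIRE_APPROVAL:
        return approved_by is not None  # needs a named human approver
    return False  # hard stop, even with approval
```

With this policy, `authorize("apply_infra_change")` is refused until a reviewer is named, while `issue_refund` stays blocked regardless. Denying unlisted actions means a newly connected tool cannot be used until someone deliberately adds it to the policy.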

During execution, oversight becomes more dynamic. Humans may approve or reject individual high-risk actions, intervene when an agent appears to be in a loop, or resolve ambiguous cases that the agent cannot reliably interpret. Confidence-aware designs allow agents to escalate when their own uncertainty is high, handing control back to humans rather than forcing a brittle decision.
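These execution-time checks can be sketched as a small monitor that the agent consults before each step. This assumes the agent reports a confidence score per step, which many systems do not provide natively; the threshold, window size, and action names are hypothetical, and repeating the same action several times in a row serves only as a crude loop signal.

```python
from collections import deque

HIGH_RISK_ACTIONS = {"issue_refund", "apply_infra_change"}  # hypothetical
CONFIDENCE_FLOOR = 0.7  # hypothetical threshold; tune per workflow
LOOP_WINDOW = 4         # identical consecutive actions treated as a loop

class ExecutionMonitor:
    """Decides, step by step, whether the agent may proceed or must hand off."""

    def __init__(self) -> None:
        self.recent: deque = deque(maxlen=LOOP_WINDOW)

    def check(self, action: str, confidence: float) -> str:
        self.recent.append(action)
        # Same action repeated across the whole window: likely stuck in a loop.
        if len(self.recent) == LOOP_WINDOW and len(set(self.recent)) == 1:
            return "escalate: possible loop"
        # Agent is unsure: hand control back rather than force a decision.
        if confidence < CONFIDENCE_FLOOR:
            return "escalate: low confidence"
        # Confident but consequential: a human approves the individual action.
        if action in HIGH_RISK_ACTIONS:
            return "await human approval"
        return "proceed"
```

The order of the checks is a design choice: loop detection comes first because a looping agent may still report high confidence, and the high-risk gate applies even when confidence is high.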

After the fact, humans play a critical role in evaluation and continuous improvement. They review logs, grade outputs, categorize errors, and refine prompts, decision rules, or tool interfaces. This ongoing feedback loop enables agents to become steadily more reliable without pretending that they will ever operate perfectly in all conditions.
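This review loop becomes easier to act on when graded outcomes are aggregated, so that the most frequent failure modes indicate which prompt, rule, or tool interface to fix first. A minimal sketch, assuming reviewers record a pass/fail grade and an error category for each run; the run IDs and category names are hypothetical sample data:

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

@dataclass
class ReviewedRun:
    run_id: str
    passed: bool
    error_category: Optional[str] = None  # assigned by a human reviewer

def summarize_reviews(runs: list[ReviewedRun]) -> dict:
    """Aggregate human grades into a pass rate and the most common error types."""
    failures = [r for r in runs if not r.passed]
    categories = Counter(r.error_category or "uncategorized" for r in failures)
    return {
        "pass_rate": (len(runs) - len(failures)) / len(runs) if runs else 0.0,
        "top_errors": categories.most_common(3),
    }

# Hypothetical graded runs from one review cycle.
runs = [
    ReviewedRun("r1", True),
    ReviewedRun("r2", False, "misread_tool_output"),
    ReviewedRun("r3", False, "stale_knowledge"),
    ReviewedRun("r4", False, "misread_tool_output"),
    ReviewedRun("r5", True),
]
summary = summarize_reviews(runs)
```

Here `summary["top_errors"]` would put `misread_tool_output` first, suggesting that tool-output handling deserves attention before knowledge freshness in the next iteration.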

Crucially, oversight is not the enemy of autonomy. It is what enables autonomy to scale responsibly. With the right oversight models, enterprises can allow agents to take on more complex tasks over time, knowing that humans are still in position to catch misaligned behavior, correct errors, and prevent cascading failures. Over the long run, oversight evolves from frequent intervention to exception review and periodic auditing, but it never disappears.

Conclusion: Guided Autonomy as the Enterprise Path Forward

Agentic AI marks a new chapter in enterprise technology. Instead of embedding intelligence in isolated predictions, organizations can now deploy systems that reason, act, and adapt inside real workflows. The potential productivity gains are substantial, especially in domains where processes are complex, information-rich, and partially structured.

However, the same qualities that make agents powerful also make them unpredictable. They can misinterpret nuance, mishandle tools, persist incorrect assumptions, or optimize aggressively for the wrong objective. Left unchecked, these systems can drift away from business intent and regulatory requirements.

The path forward is not to shy away from agents, nor to treat them as replacements for deterministic automation. It is to embrace guided autonomy: agents that operate within clearly defined boundaries, supported by robust governance frameworks and active human oversight.

Enterprises that approach Agentic AI in this way, acknowledging both its promise and its limits, will be able to turn hype into durable advantage. They will move beyond impressive demos to build real, resilient, and trustworthy agentic capabilities at scale.