Why Multi-Sensor Data Annotation Needs More Than Just Automation

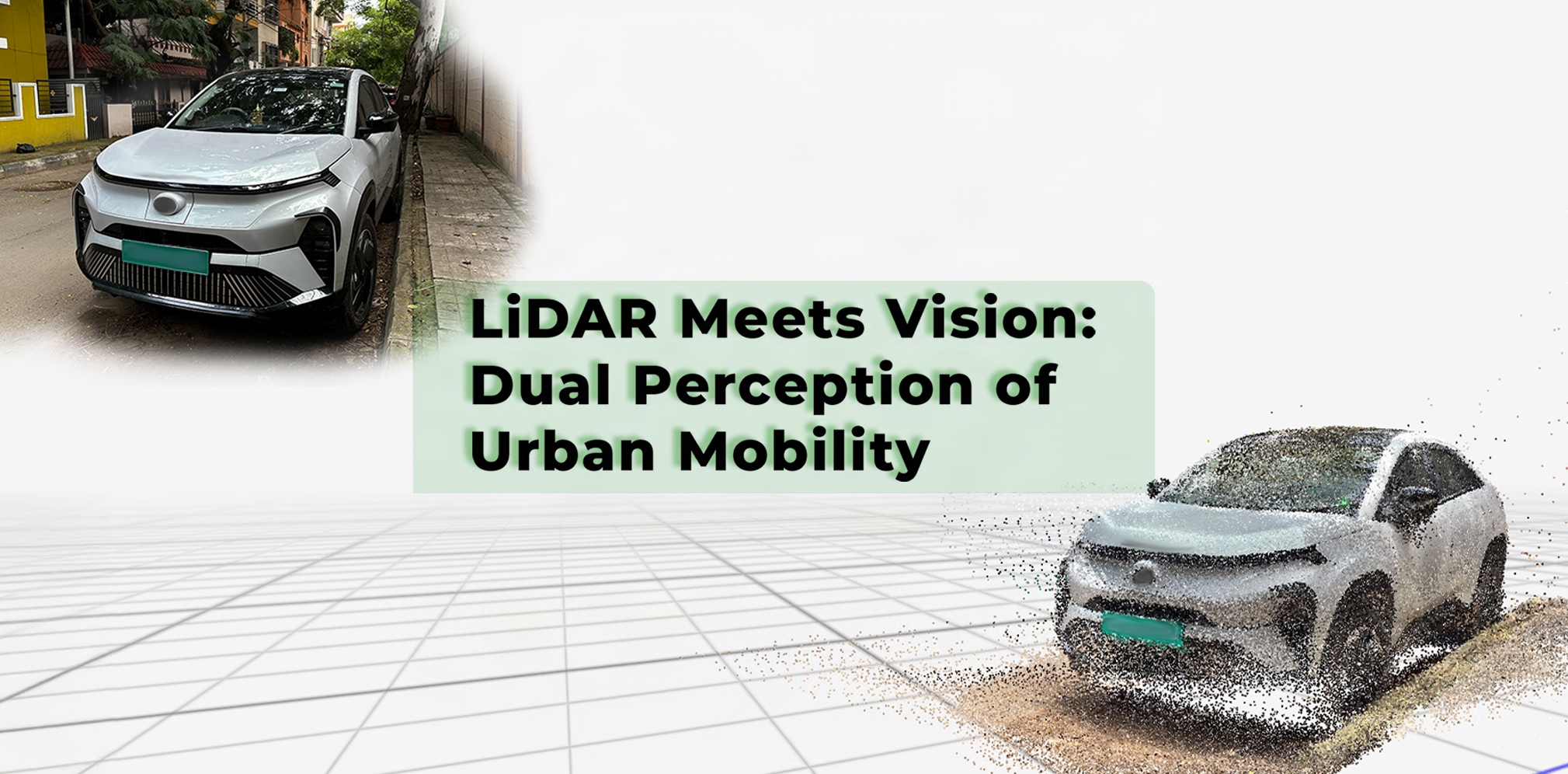

AI perception now means understanding context, environment, depth, and movement from many sensors. From self-driving cars to smart cities, intelligent systems rely on fused sensor data to operate safely and accurately.

The richer the data, the harder it becomes to label and interpret correctly.

At NextWealth, we specialize in high-precision Human-in-the-Loop (HITL) annotation for multi-sensor datasets. We achieve this in partnership with our advanced tooling partner, Taskmonk, whose flexible annotation platform enables us to deliver high-quality, multimodal datasets at scale.

Understanding Multi-Sensor Data: Building Blocks of Intelligent Perception

Multi-sensor data combines inputs from different sensing modalities, like:

- LiDAR – for spatial depth and 3D geometry.

- Cameras – for texture, colour, and visual cues.

- Radar – for object velocity and distance in low visibility.

- Thermal imaging – for detecting heat signatures, especially at night or in emergency scenarios.

- IMU (Inertial Measurement Units) and GPS – for movement tracking and geolocation.

The goal of fusing this data is to provide AI with a 360-degree situational understanding, just like humans rely on multiple senses to make informed decisions.

Each sensor brings unique capabilities, but it also introduces different data types, sampling rates, formats, and fields of view, making annotation significantly more complex than single-sensor labeling.

The Hidden Complexity Behind Multi-Sensor Labeling

Let’s break it down into four major pain points:

Temporal & Spatial Misalignment

Sensors operate at different frame rates and are mounted at different locations on a vehicle or device. A LiDAR may capture at 10 Hz and a camera at 30 Hz, while an IMU typically streams samples at a far higher rate than either.

Aligning these streams accurately in both space and time is a non-trivial task. Even a few milliseconds of drift or positional misalignment can lead to incorrect object annotations, making the model learn faulty associations.
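As a rough illustration of the temporal side of this problem, the sketch below pairs each LiDAR sweep with the nearest camera frame by timestamp. It is a minimal example, not a description of any production pipeline: the function name `match_nearest`, the 20 ms tolerance, and the synthetic timestamps are all assumptions made for illustration.

```python
from bisect import bisect_left

def match_nearest(lidar_ts, camera_ts, max_gap_s=0.02):
    """Pair each LiDAR timestamp with the closest camera timestamp.

    Both lists hold seconds and must be sorted ascending. When the closest
    camera frame is further away than max_gap_s (an illustrative tolerance),
    the sweep is paired with None so it can be flagged for review.
    """
    pairs = []
    for t in lidar_ts:
        i = bisect_left(camera_ts, t)
        # Only the neighbours on either side of the insertion point can be closest.
        candidates = camera_ts[max(i - 1, 0):i + 1]
        best = min(candidates, key=lambda c: abs(c - t), default=None)
        if best is None or abs(best - t) > max_gap_s:
            pairs.append((t, None))
        else:
            pairs.append((t, best))
    return pairs

# Synthetic data: 10 Hz LiDAR sweeps and 30 Hz camera frames,
# with the camera clock running 5 ms behind (assumed offset).
lidar = [i / 10 for i in range(5)]
camera = [0.005 + i / 30 for i in range(15)]
matched = match_nearest(lidar, camera)
```

In this synthetic run every sweep pairs with a camera frame stamped 5 ms later. Real systems usually also need hardware triggering or clock synchronization; nearest-timestamp matching only handles the bookkeeping afterwards.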

Calibration & Sensor Drift

For the data streams to be meaningfully fused, all sensors need precise calibration. Over time, physical shifts due to vibrations, temperature changes, or mechanical wear can throw off alignment.

Example: A slight angle shift in a LiDAR unit might cause a car in the environment to appear several centimetres off in the 3D point cloud, resulting in incorrect annotations unless a human catches it.
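To put numbers on that example, the lateral error caused by a small angular misalignment grows with distance, roughly as range × tan(angle). The snippet below is a back-of-the-envelope sketch; the 30 m range and 0.1° error are assumed values chosen for illustration, not measurements.

```python
import math

def lateral_offset_m(range_m, angle_error_deg):
    """Approximate sideways displacement of a point at range_m metres
    when the sensor's pointing angle is off by angle_error_deg degrees."""
    return range_m * math.tan(math.radians(angle_error_deg))

# Assumed scenario: a car 30 m away, LiDAR mount rotated by 0.1 degrees.
offset_cm = lateral_offset_m(30.0, 0.1) * 100
print(f"{offset_cm:.1f} cm")  # about 5.2 cm
```

An offset of a few centimetres is enough to push a tightly fitted 3D box off the object it is supposed to enclose.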

Environmental Variability & Edge Cases

Multi-sensor data often reflects real-world, unpredictable environments:

- Glare affects cameras.

- Rain distorts LiDAR reflections.

- Fog impacts thermal readings.

- Nighttime drastically alters colour and depth visibility.

Automation often fails in such non-ideal conditions. Annotators must interpret complex overlaps, partial occlusions, or lighting anomalies to maintain dataset integrity.

Sheer Volume of Data

A single autonomous vehicle can generate terabytes of data per day. These include synchronized video, LiDAR sweeps, radar pulses, IMU logs, and GPS trails.

Manually labeling such a massive, fused dataset with high accuracy requires not only workforce scale but also intelligent workflow design, capable annotation tools, and stringent QA protocols.

Why Human Judgment is Irreplaceable in Multi-Sensor Annotation

AI-assisted tools can accelerate annotation only to an extent. HITL ensures the accuracy, context awareness, and error correction that automation alone cannot provide.

Here’s where HITL makes a difference:

- Contextual understanding: A human can recognize that a pedestrian partly obscured by a parked truck is still a potential hazard. Automation might miss this nuance.

- Cross-modal verification: Annotators align labels across LiDAR and RGB, ensuring that what the AI “sees” is consistent in all views.

- Edge-case detection: Human reviewers can catch rare or ambiguous situations that automation hasn’t seen before.
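Cross-modal verification can be partly supported by a simple geometric check: project the centre of a 3D LiDAR box into the camera image and confirm it lands inside the matching 2D box. The sketch below uses a standard pinhole camera model and assumes the point has already been transformed into the camera frame. The function names and intrinsic values are hypothetical, and a check like this only flags candidates for a human to inspect.

```python
def project_to_image(point_cam, fx, fy, cx, cy):
    """Project a 3D point in the camera frame (x right, y down, z forward)
    to pixel coordinates using the pinhole model."""
    x, y, z = point_cam
    if z <= 0:
        return None  # behind the camera, so it cannot appear in the image
    return (fx * x / z + cx, fy * y / z + cy)

def labels_agree(center_cam, box_2d, intrinsics):
    """Return True if the projected 3D box centre falls inside the
    2D box given as (xmin, ymin, xmax, ymax) in pixels."""
    pixel = project_to_image(center_cam, *intrinsics)
    if pixel is None:
        return False
    u, v = pixel
    xmin, ymin, xmax, ymax = box_2d
    return xmin <= u <= xmax and ymin <= v <= ymax

# Made-up intrinsics (fx, fy, cx, cy) for a 1280x720 image.
intrinsics = (1000.0, 1000.0, 640.0, 360.0)
center = (2.0, 0.5, 20.0)  # 3D box centre, 20 m ahead of the camera

consistent = labels_agree(center, (700, 350, 780, 420), intrinsics)
mismatch = labels_agree(center, (100, 100, 200, 200), intrinsics)
```

Here the centre projects to pixel (740, 385), so the first 2D box is consistent with the 3D label and the second would be sent to an annotator for a closer look.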

At NextWealth, HITL is at the heart of our multi-sensor annotation pipeline. It’s what turns noisy, fragmented data into high-confidence ground truth.

Powering Precision: How NextWealth & Taskmonk Deliver Together

Our pipeline is powered by Taskmonk, a robust annotation platform optimized for multi-modal data. Key capabilities include:

- Real-time LiDAR-RGB sync viewers

- Radar heatmap overlays

- Temporal navigation across sensor streams

- Motion trail visualization from IMU and GPS

- Intelligent frame-to-frame interpolation: The platform automatically interpolates labels between frames when appropriate, especially in time-series data such as video or LiDAR sweeps. This minimizes repetitive manual labeling across consecutive frames, reducing annotation fatigue and overall average handling time (AHT) while preserving continuity and spatial accuracy.

- Configurable reviewer protocols
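The idea behind frame-to-frame interpolation in the list above can be sketched with plain linear interpolation between two human-labelled keyframes. This is a generic illustration and not Taskmonk's actual implementation; the function name and box layout `(x, y, z, length, width, height)` are assumptions, and heading angle is left out because it needs wrap-around handling.

```python
def interpolate_box(key_a, key_b, frame):
    """Linearly interpolate a box between two keyframes.

    key_a and key_b are (frame_index, box) pairs, where box is a tuple of
    numbers such as (x, y, z, length, width, height).
    """
    frame_a, box_a = key_a
    frame_b, box_b = key_b
    if frame_a == frame_b or not (frame_a <= frame <= frame_b):
        raise ValueError("frame must lie between two distinct keyframes")
    t = (frame - frame_a) / (frame_b - frame_a)
    return tuple(a + t * (b - a) for a, b in zip(box_a, box_b))

# An annotator labels frames 0 and 10; frames in between are filled in.
start = (0, (10.0, 0.0, 0.0, 4.0, 2.0, 1.5))
end = (10, (20.0, 2.0, 0.0, 4.0, 2.0, 1.5))
midpoint = interpolate_box(start, end, 5)
# midpoint == (15.0, 1.0, 0.0, 4.0, 2.0, 1.5)
```

Linear interpolation works well for objects moving at roughly constant velocity. Turning or braking objects need more keyframes, which is where reviewer correction comes in.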

Taskmonk’s intuitive interface, combined with NextWealth’s trained teams, ensures high annotation velocity with low error rates. It also enables seamless client collaboration, QA checklists, and secure data governance.

Our Proven Multi-Sensor Annotation Framework

We’ve built our operations to meet the specific needs of multi-sensor annotation:

Advanced Multi-Modal Tooling

We use tools like Taskmonk, which are customized for multi-stream annotation. Annotators can:

- Visualize LiDAR and RGB in sync

- Overlay radar heat maps

- Toggle between temporal frames

- Align IMU motion trails with camera feeds

Domain-Trained Annotators

Our teams undergo structured training specific to industries like:

- ADAS (Advanced Driver Assistance Systems)

- Indoor Robotics

- Smart city surveillance

They understand sensor behaviour, calibration logic, and application-specific annotation rules.

Smarter Labeling: AI-Assisted, Human-Verified

We use AI-powered pre-labelling pipelines for common objects (e.g., vehicles, signs). But edge cases are escalated with a defined reviewer protocol to ensure no corner case slips through.
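One common way to split work between AI pre-labels and human reviewers is to route each pre-label by class and model confidence. The sketch below is a hypothetical illustration of that pattern, not NextWealth's actual protocol: the class list, the 0.85 threshold, and the queue names are all assumptions.

```python
from dataclasses import dataclass

@dataclass
class PreLabel:
    object_id: str
    category: str
    confidence: float  # model score in [0, 1]

# Assumed values for illustration only.
COMMON_CLASSES = {"vehicle", "sign"}
REVIEW_THRESHOLD = 0.85

def route(label):
    """Send confident pre-labels of common classes to a light spot check;
    escalate everything else to full human review."""
    if label.category in COMMON_CLASSES and label.confidence >= REVIEW_THRESHOLD:
        return "spot_check"
    return "human_review"

queue = [
    PreLabel("a", "vehicle", 0.95),  # common class, confident
    PreLabel("b", "vehicle", 0.40),  # common class, low confidence
    PreLabel("c", "debris", 0.99),   # rare class, always escalated
]
decisions = [route(label) for label in queue]
```

With these inputs, only the first pre-label goes to a spot check; the low-confidence vehicle and the rare class are both escalated.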

Secure, Scalable, Enterprise-Grade Operations

Our infrastructure supports:

- ISO 27001 certified data handling

- Custom NDA and VPN setups

- Cloud-based collaboration

- Scalable teams (50–500+) trained on multi-sensor use cases

Results That Speak: The Metrics Behind Our Impact

- 4M+ annotated multi-modal frames

- 99.3% cross-sensor annotation accuracy

- 1500+ annotators skilled in sensor fusion workflows

- 100+ documented edge-case templates reused across projects

Where It Works: High-Impact Multi-Sensor Applications

Autonomous Vehicles

- Annotating pedestrians hidden by obstructions

- Resolving radar-camera conflicts

- Verifying road lanes under poor lighting

Drone Inspections

- Mapping infrastructure via RGB-LiDAR overlays

- Detecting defects on solar panels

- Combining thermal and topographic data for terrain analysis

Indoor Robotics

- Navigating tight warehouse paths

- Recognizing movable vs static objects

- Fusing IMU data for accurate localization

Smart Cities

- Tracking traffic across CCTV + radar

- Identifying rule violations

- Categorizing vehicle types by sensor fusion

What It Looks Like: Annotation in Action…

We include multiple visual dashboards and diagrams for clients:

- Sensor Fusion Stack – showing synchronized RGB, LiDAR, and radar layers.

- Annotation Workflow – from raw data intake to HITL review stages.

- Before vs After Frames – showing AI pre-labels refined by human QA.

Looking Ahead: Why Precision Needs People + Platforms

To create truly intelligent AI systems, you need training data that reflects the world’s full complexity. This is why NextWealth, powered by Taskmonk, delivers annotated datasets that blend automation efficiency with human precision.

We don’t just label objects; we interpret multi-dimensional sensor data with context, experience, and domain fluency.

Let’s Build AI That Truly Understands Its World

If you need high-quality annotated data for autonomous fleets, inspections, or traffic analysis, trust NextWealth and Taskmonk.