How HITL Improves eCommerce Search Relevance

We often think of eCommerce search relevance as just another function that helps with product discovery. However, in a highly competitive industry like eCommerce, search relevance can make or break a user’s shopping experience.

According to a Forrester report, 43% of retail shoppers head directly to the search bar, and their likelihood of purchasing increases significantly when the search experience is well optimized. In other words, shoppers who search for a product expect instant, accurate results. Failing to deliver relevant results leads to frustration, cart abandonment, and lost revenue.

This is where Human-in-the-Loop (HITL) methodologies come into play. By bringing human intelligence into machine learning (ML) systems, HITL offers a promising way to improve eCommerce search relevance. Unlike traditional search algorithms that rely solely on automated processes, HITL adds contextual understanding, domain expertise, and real-time adaptability, all of which are critical for nuanced and effective search results.

Challenges in Achieving Optimal Search Relevance

Despite advancements in modern search algorithms, eCommerce site search relevance remains a complex code to crack. Listed below are some of the common and most persistent hurdles.

Ambiguous Queries

Shoppers often use search terms that are open to multiple interpretations. For instance, a search term such as ‘serum’ could mean different things depending on the shopper’s age, gender, or specific use case: it could refer to a skincare serum, a hair growth treatment, or even a medical-grade product.

Automated systems lack the contextual awareness to distinguish between these meanings, which leads to irrelevant results and, in turn, user frustration. Understanding such queries requires more than better natural language processing; it also requires a clear understanding of user behavior, which is where human insight becomes critical.

Dynamic Product Catalogs

eCommerce platforms operate in an environment of constant change. New products are added, older ones go out of stock, prices change, and descriptions are frequently updated. This creates a moving target for search engines, which must keep their indexes current. An out-of-date index may surface products that are unavailable or overlook newly listed items altogether.

Automated systems, while fast, often struggle to reflect these real-time changes with precision, particularly when product taxonomy or categorization lacks consistency. Human reviewers can help identify and correct such inconsistencies more effectively than a machine alone.

Understanding User Intent

Every search query expresses an intent. That intent can be easy to comprehend, or it can be implicit, or what is often called “fuzzy”. Imagine someone searching for “sneakers under 2000”: this is clearly a price-sensitive query. Now imagine someone typing “work-from-home setup”. They would most likely expect a curated list of items including desks, chairs, webcams, and ring lights.

This is a highly contextual expectation that is difficult for an algorithm to deduce. Without a nuanced understanding of these intent signals, search results driven purely by machine learning can miss the mark. Automated models often use past behavior to make guesses, but humans are far better at grasping the multifaceted nature of shopper needs, especially when those needs are phrased vaguely.

Handling Long-Tail Queries

Long-tail queries are specific, low-frequency search terms that don’t occur often enough in the data for a machine learning model to learn from effectively. Examples include “eco-friendly stainless steel lunch box with bamboo lid” or “bohemian-style floor lamp with warm LED lighting.” While these queries are often high-intent and conversion-prone, automated systems may return generic or irrelevant results due to inadequate training data. Long-tail queries are also where personalization and deep product knowledge matter most — a space where human curation and annotation can dramatically increase the relevance and richness of search responses.

Understanding Product Attributes and Catalog Complexity

eCommerce platforms often have vast and diverse product catalogs, containing millions of items with varying attributes, specifications, and categorizations. Accurately interpreting and indexing these product details is crucial if the end goal is to show relevant search results.

Many search systems rely on keyword matching and structured data fields. However, this approach can fall short when product information is inconsistent, incomplete, or described differently across listings. For instance, one seller might list a product as a “wireless mouse,” while another lists it as a “cordless mouse.” Without a nuanced understanding of such variations, search engines may fail to associate these listings appropriately, leading to fragmented or irrelevant search outcomes.

Product listings often vary in price, availability, and description depending on the shopper’s region. In such cases, HITL processes help ensure that product updates account for region-specific expectations and reduce confusion among shoppers.

Moreover, products often have attributes that require contextual interpretation. A search for “eco-friendly running shoes” necessitates understanding the product category as well as environmental certifications, materials used, and manufacturing processes. Capturing and interpreting such multifaceted information requires advanced semantic analysis and comprehensive product knowledge.

All these factors highlight the limitations of fully automated systems and set the stage for the value of HITL.

Integrating HITL in Search Systems

So, what exactly is Human-in-the-Loop?

HITL is a machine learning approach that uses human judgment to train, fine-tune, and validate AI models. Rather than replacing people, HITL has machines and humans work together: machines perform the bulk of the computation, while humans improve, correct, and contextualize the results. In the context of eCommerce search relevance, HITL plays a transformational role at several important stages.

Key Stages of HITL in Search Systems

Data Annotation: The Foundation of Search Relevance

High-quality data annotation is the basis of any effective machine-learning model. In eCommerce, this involves:

- Tagging products with categories, attributes (e.g., color, material, size), and unique selling propositions (e.g., “eco-friendly”, “best for gifting”) using a consistent, standardized vocabulary.

- Labeling search queries with user intent or expected outcomes. For instance, a search for “smartwatch” might reflect interest in fitness tracking (heart rate, step count, water resistance), productivity (calendar sync, call alerts, voice assistance), or simply a fashion accessory.

- Curating training data to reflect diverse user demographics, preferences, and seasonal behaviors.

Human annotators bring the contextual awareness needed to make these judgments accurately. Their input ensures that the model is trained on a representative and meaningful data set, reducing bias and facilitating generalization across use cases.
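The annotation tasks above can be captured as simple typed records. The sketch below is a minimal illustration only: the record names (`ProductAnnotation`, `QueryAnnotation`) and the tiny attribute vocabulary (`ALLOWED_ATTRIBUTES`) are hypothetical, and a real catalog taxonomy would be far larger and maintained separately.

```python
from dataclasses import dataclass, field

# Hypothetical controlled vocabulary; a real catalog taxonomy would be much larger.
ALLOWED_ATTRIBUTES = {"color", "material", "size"}


@dataclass
class ProductAnnotation:
    product_id: str
    category: str
    attributes: dict = field(default_factory=dict)      # e.g. {"material": "bamboo"}
    selling_points: list = field(default_factory=list)  # e.g. ["eco-friendly"]

    def validate(self):
        """Return a list of problems so annotators can fix them before export."""
        problems = []
        if not self.category:
            problems.append("missing category")
        for key in self.attributes:
            if key not in ALLOWED_ATTRIBUTES:
                problems.append(f"unknown attribute: {key}")
        return problems


@dataclass
class QueryAnnotation:
    query: str
    intent: str                                          # e.g. "fitness_tracking"
    expected_features: list = field(default_factory=list)


smartwatch = QueryAnnotation(
    "smartwatch", "fitness_tracking", ["heart rate", "step count", "water resistance"]
)
lunch_box = ProductAnnotation("p-101", "kitchen", {"material": "bamboo", "finish": "matte"})
print(lunch_box.validate())  # ['unknown attribute: finish']
```

Validating against a fixed vocabulary is one way to enforce the "standard conventions" that make tags usable across listings.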

Model Training and Supervised Feedback Loops

Once a labeled dataset is available, machine learning models can be trained to map queries to the most relevant products. However, model training is not a one-and-done process; it evolves continuously. This is where HITL comes in.

- Human reviewers assess model outputs for relevance, quality, and fairness.

- Supervised feedback loops allow humans to correct erroneous predictions (e.g., irrelevant search results or misclassified items), which the model then incorporates in the next training cycle.

- Edge cases, such as ambiguous or long-tail queries, can be routed to human reviewers, focusing retraining efforts where they have the most impact.

This continuous validation cycle significantly improves the model’s ability to understand intent and context, both of which are critical in eCommerce.
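One way to picture this cycle is a merge step in which reviewer corrections override earlier labels before the next training run. The sketch assumes labels are stored in a dictionary keyed by `(query, product_id)` pairs with a 0-4 relevance grade; `merge_feedback` is a hypothetical name, not part of any particular framework.

```python
def merge_feedback(existing_labels, corrections):
    """Build the next training set from the current labels plus reviewer corrections.

    Both arguments map (query, product_id) -> relevance grade (0-4).
    Human corrections always win. Also returns the pairs whose label changed,
    which shows where the model was going wrong.
    """
    training = dict(existing_labels)  # copy so the previous cycle stays intact
    changed = []
    for pair, grade in corrections.items():
        if training.get(pair) != grade:
            changed.append(pair)
        training[pair] = grade
    return training, changed


labels = {("serum", "p1"): 3, ("serum", "p2"): 2}
fixes = {("serum", "p2"): 0, ("serum", "p3"): 4}  # p2 was misranked; p3 was missed
next_labels, changed = merge_feedback(labels, fixes)
print(changed)  # [('serum', 'p2'), ('serum', 'p3')]
```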

Active Learning: Efficient Human Involvement

In active learning frameworks, the model flags uncertain predictions and sends them to human annotators for review. This targeted approach:

- Reduces annotation load by focusing only on difficult or high-impact cases.

- Speeds up training iterations by involving human insight wherever the machine algorithm is unsure.

- Identifies rare or ambiguous search scenarios that lack sufficient training data.

For example, if the model consistently struggles to understand what users mean by “minimalist home decor,” HITL intervention can clarify which products fit this aesthetic, enriching the system’s learning.
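A common way to decide which predictions count as "uncertain" is uncertainty sampling: rank items by the entropy of the model's predicted class distribution and send the highest-entropy ones to annotators. The sketch below assumes the model outputs a probability for each of a few hypothetical intent classes per query; entropy is only one option, and least-confidence or margin sampling are common alternatives.

```python
import math


def entropy(probs):
    """Shannon entropy (in bits) of a predicted class distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def select_for_review(predictions, budget):
    """Return the `budget` queries whose predicted intent distribution has
    the highest entropy, i.e. where the model is least sure."""
    ranked = sorted(predictions.items(), key=lambda item: entropy(item[1]), reverse=True)
    return [query for query, _ in ranked[:budget]]


# Hypothetical probabilities over three intent classes per query.
predictions = {
    "running shoes": [0.95, 0.03, 0.02],
    "minimalist home decor": [0.40, 0.35, 0.25],
    "joggers": [0.50, 0.45, 0.05],
}
print(select_for_review(predictions, budget=2))  # ['minimalist home decor', 'joggers']
```

The `budget` parameter is what keeps annotation load bounded: only the most ambiguous queries reach reviewers.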

Real-Time or Near-Real-Time Human Feedback

In live environments, HITL can also function as a real-time feedback mechanism. Evaluators can audit and score live queries for relevance, rating each search result as a perfect match, a partial match, or irrelevant. They can also monitor popular search trends to spot gaps in search relevance.

This level of human supervision ensures that even when machine models are deployed at scale, there is still an ongoing mechanism in the back end to course-correct, personalize, and refine based on user behavior and business needs.
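A minimal sketch of how such live ratings might be rolled up: each perfect/partial/irrelevant label is mapped to a score, and queries whose average falls below a threshold are flagged for attention. The numeric mapping, the `threshold`, and the `min_ratings` cutoff are all assumptions chosen for illustration.

```python
from collections import defaultdict

# Assumed mapping from the three rating labels to numeric scores.
RATING_SCORES = {"perfect": 1.0, "partial": 0.5, "irrelevant": 0.0}


def flag_queries(ratings, threshold=0.6, min_ratings=3):
    """ratings: iterable of (query, label) pairs from evaluators.

    Returns {query: mean score} for queries scoring below `threshold`,
    ignoring queries with fewer than `min_ratings` ratings.
    """
    scores = defaultdict(list)
    for query, label in ratings:
        scores[query].append(RATING_SCORES[label])
    flagged = {}
    for query, values in scores.items():
        if len(values) >= min_ratings:
            mean = sum(values) / len(values)
            if mean < threshold:
                flagged[query] = round(mean, 2)
    return flagged


ratings = [("bluetooth speaker", "perfect")] * 3 + [
    ("laptop stand", "partial"),
    ("laptop stand", "irrelevant"),
    ("laptop stand", "partial"),
]
print(flag_queries(ratings))  # {'laptop stand': 0.33}
```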

Post-Deployment Evaluation and Continuous Improvement

Human input continues to be invaluable in post-deployment stages.

- A/B testing and user surveys offer insight into user satisfaction with search relevance.

- Expert audits can periodically review performance against business goals and customer experience benchmarks.

Including qualified human teams in the eCommerce search lifecycle can help create a system that continually learns, evolves, and scales with both user needs and catalog complexity.

Technical Implementation of HITL in eCommerce Search

Implementing HITL is not just about hiring a team of data annotators. It requires a strategic, well-integrated system architecture. Here’s a breakdown of how HITL is typically implemented in an eCommerce search environment.

Query Intent Annotation

HITL annotators assess and clarify ambiguous queries such as “joggers”, which could mean men’s activewear, women’s loungewear, or even children’s clothing. This disambiguation ensures that the labeled intent accurately reflects the category, demographic, and occasion, which improves personalization and the accuracy of retrieved results.
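When several annotators label the same query, their answers have to be combined. The sketch below uses a simple majority vote with an agreement threshold, sending low-agreement queries back for adjudication; the label names and the 0.6 threshold are hypothetical, and teams may prefer weighted or expert-adjudicated schemes instead.

```python
from collections import Counter


def resolve_intent(labels, min_agreement=0.6):
    """Majority vote over annotator labels for one query.

    Returns (label, agreement). If agreement is below `min_agreement`,
    the label is None, signaling that the query needs adjudication.
    """
    label, votes = Counter(labels).most_common(1)[0]
    agreement = votes / len(labels)
    return (label if agreement >= min_agreement else None), round(agreement, 2)


print(resolve_intent(["mens_activewear", "mens_activewear", "womens_loungewear"]))
# ('mens_activewear', 0.67)
print(resolve_intent(["mens_activewear", "womens_loungewear", "kids_clothing", "womens_loungewear"]))
# (None, 0.5)
```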

Relevance Grading for Ranking Models

Human annotators grade the relevance of query-product pairs on a scale from 0 to 4 to train and evaluate learning-to-rank (LTR) models, such as gradient-boosted rankers built with XGBoost or BERT-based ranking systems. For instance, when ranking results for “USB adapters under 500,” annotators must judge not just keyword matches but also whether each product’s price and features fit the query.
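Pairwise LTR methods (XGBoost's `rank:pairwise` objective is one example) learn from pairs of products for the same query where one is graded higher than the other. The sketch below shows how 0-4 grades could be turned into such preference pairs in plain Python; the data layout and the `pairwise_preferences` name are illustrative assumptions. In practice, a library such as XGBoost can also consume graded labels with query groups directly.

```python
from itertools import combinations


def pairwise_preferences(judgments):
    """judgments: dict mapping query -> {product_id: grade on the 0-4 scale}.

    Yields (query, preferred_id, other_id) for every pair of products under
    the same query whose grades differ. Equal grades carry no preference.
    """
    for query, grades in judgments.items():
        for (a, grade_a), (b, grade_b) in combinations(grades.items(), 2):
            if grade_a > grade_b:
                yield query, a, b
            elif grade_b > grade_a:
                yield query, b, a


judgments = {"usb adapters under 500": {"p1": 4, "p2": 1, "p3": 4}}
print(list(pairwise_preferences(judgments)))
# [('usb adapters under 500', 'p1', 'p2'), ('usb adapters under 500', 'p3', 'p2')]
```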

Facet & Attribute Validation

Human reviewers verify that filters, such as “water resistant” for bags or “skill level” for digital cameras, are applied correctly so that products appear under the right categories. This validation helps prevent user frustration caused by empty or irrelevant filter results.
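A facet check can be framed as comparing what a filter shows against the product's verified attribute data, flagging both products that are wrongly listed and products that are missing. The sketch below assumes each product is a dictionary with hypothetical `facets` and `attributes` fields; a real system would read these from the search index and the catalog respectively.

```python
def validate_facet(products, facet, value):
    """Compare a filter's contents with verified product data.

    products: list of dicts with "id", "facets" (values the filter UI uses)
    and "attributes" (values from the verified product data).
    Returns products wrongly listed under facet=value and products missing from it.
    """
    wrongly_listed, missing = [], []
    for p in products:
        shown = p["facets"].get(facet) == value
        actual = p["attributes"].get(facet) == value
        if shown and not actual:
            wrongly_listed.append(p["id"])
        elif actual and not shown:
            missing.append(p["id"])
    return {"wrongly_listed": wrongly_listed, "missing": missing}


bags = [
    {"id": "bag-1", "facets": {"water_resistant": "yes"}, "attributes": {"water_resistant": "yes"}},
    {"id": "bag-2", "facets": {"water_resistant": "yes"}, "attributes": {}},
    {"id": "bag-3", "facets": {}, "attributes": {"water_resistant": "yes"}},
]
print(validate_facet(bags, "water_resistant", "yes"))
# {'wrongly_listed': ['bag-2'], 'missing': ['bag-3']}
```

The two lists map to the two failure modes in the text: irrelevant filter results and filters that look emptier than they should.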

SERP Quality Audits

Annotators identify poor search results, such as duplicate entries, irrelevant products (for example, a “Bluetooth speaker” showing USB hubs), or important omissions. This detailed feedback improves ranking methods, indexing logic, and query preprocessing.
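Parts of a SERP audit can be pre-computed so annotators spend their time on judgment rather than bookkeeping. The sketch below flags duplicate entries by normalized title and lists the positions that human raters marked irrelevant; the input shapes and the title-based duplicate rule are simplifying assumptions (real systems may also match on SKU or image).

```python
def audit_serp(results, judgments):
    """results: ranked list of (product_id, title).
    judgments: product_id -> human label, e.g. "relevant" or "irrelevant".

    Returns 1-based positions of duplicates (as (position, first_seen_position))
    and of results judged irrelevant.
    """
    seen = {}
    duplicates, irrelevant = [], []
    for pos, (pid, title) in enumerate(results, start=1):
        key = " ".join(title.lower().split())  # case- and whitespace-insensitive title
        if key in seen:
            duplicates.append((pos, seen[key]))
        else:
            seen[key] = pos
        if judgments.get(pid) == "irrelevant":
            irrelevant.append(pos)
    return {"duplicates": duplicates, "irrelevant": irrelevant}


serp = [
    ("s1", "Portable Bluetooth Speaker"),
    ("h1", "4-Port USB Hub"),
    ("s2", "portable  bluetooth speaker"),
]
print(audit_serp(serp, {"h1": "irrelevant"}))
# {'duplicates': [(3, 1)], 'irrelevant': [2]}
```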

Typo and Variant Query Normalization

HITL teams can address spelling mistakes and regional variants (such as “tshirt,” “tee-shirt,” and “T shirt”). Annotators help create normalization dictionaries and label user-preferred variants across different regions.
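A normalization dictionary of this kind can be applied with a single regular expression pass. The sketch below assumes a small, hypothetical dictionary in which annotators have mapped each variant to the canonical form "t-shirt"; longer variants are tried first so that multi-word entries take precedence.

```python
import re

# Hypothetical normalization dictionary built from annotator labels.
NORMALIZATION = {
    "tshirt": "t-shirt",
    "tee-shirt": "t-shirt",
    "t shirt": "t-shirt",
}

# One alternation, longest variants first, bounded by word boundaries.
_PATTERN = re.compile(
    r"\b("
    + "|".join(re.escape(v) for v in sorted(NORMALIZATION, key=len, reverse=True))
    + r")\b"
)


def normalize_query(query):
    """Lowercase, collapse whitespace, then map known variants to canonical terms."""
    q = " ".join(query.lower().split())
    return _PATTERN.sub(lambda m: NORMALIZATION[m.group(0)], q)


print(normalize_query("Blue  T shirt"))  # blue t-shirt
```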

Edge Case Identification for Model Retraining

For queries with sparse or ambiguous training data, such as “eco-friendly dishwasher tablets,” human-labeled samples feed active learning loops, which helps stabilize model performance in long-tail scenarios.
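Finding those edge cases can start from two simple signals: how often a query appears in search logs and how many human labels it already has. The sketch below is a minimal illustration; both thresholds (`max_freq`, `min_labels`) are hypothetical and would be tuned per catalog.

```python
def long_tail_candidates(query_counts, labeled_counts, max_freq=5, min_labels=3):
    """Return rare queries (<= max_freq searches) that have fewer than
    `min_labels` human-labeled query-product pairs, most frequent first,
    so the more common of the rare queries get labeled first."""
    candidates = [
        q for q, freq in query_counts.items()
        if freq <= max_freq and labeled_counts.get(q, 0) < min_labels
    ]
    return sorted(candidates, key=lambda q: query_counts[q], reverse=True)


query_counts = {
    "dishwasher tablets": 900,
    "eco-friendly dishwasher tablets": 4,
    "bamboo lid lunch box": 2,
    "art deco wallpaper": 5,
}
labeled_counts = {"art deco wallpaper": 3}
print(long_tail_candidates(query_counts, labeled_counts))
# ['eco-friendly dishwasher tablets', 'bamboo lid lunch box']
```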

Visual-Aware Search Feedback

Annotators evaluate how well product images match queries in visually driven categories such as fashion or home decor (for example, judging whether a product shown for “art deco wallpaper” is aesthetically and stylistically aligned with the query). This feedback supports the development of multimodal search models.

The Impact of HITL on eCommerce Search KPIs

The effectiveness of HITL is measured by its impact on eCommerce search performance metrics such as the following:

Precision@k

This metric measures the proportion of the top k results for a query that are relevant. Precision@k typically improves once human-labeled data and feedback loops are in place.
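As a minimal sketch, Precision@k can be computed from a ranked list of product IDs and the set of IDs that human raters judged relevant; the IDs below are placeholders. This version divides by k even when fewer than k results are returned, which is the usual convention.

```python
def precision_at_k(ranked_ids, relevant_ids, k):
    """Fraction of the top-k results that human raters judged relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    return sum(1 for pid in ranked_ids[:k] if pid in relevant_ids) / k


ranked = ["p1", "p2", "p3", "p4", "p5"]
relevant = {"p1", "p3", "p9"}
print(precision_at_k(ranked, relevant, k=5))  # 0.4
```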

Click-Through Rates (CTR)

CTR reflects user engagement with search results. By refining product rankings based on human expertise, HITL can help align results more closely with what users expect to see, thus driving up CTRs.

For example, a HITL-enhanced model might learn that users searching for “laptop stand” prefer ergonomic, adjustable designs over basic fixed ones, even if the latter have better keyword matches.

Conversion Rates

Users who use site search tend to show higher purchase intent. According to a study by Nacho Analytics, Amazon’s conversion rate increases 6x when visitors perform a site search.

Normalized Discounted Cumulative Gain (NDCG) @K

NDCG measures ranking quality by comparing the actual ranking with an ideal ordering in which the most relevant items appear at the top of the list. Scores range from 0 to 1, where 1 indicates a perfect ordering and lower values indicate poorer ranking quality. The @K suffix means that only the top K ranked items contribute to the calculation.
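A minimal sketch of NDCG@K over human relevance grades (for example, the 0-4 scale used for relevance grading). It uses the common exponential-gain form, DCG@K = sum over positions i = 1..K of (2^rel_i - 1) / log2(i + 1), divided by the DCG of the ideal ordering; a linear-gain variant (rel_i instead of 2^rel_i - 1) is also widely used. As a simplifying assumption, the ideal ordering here is built only from the grades of the returned results; a stricter version would use every judged product for the query.

```python
import math


def dcg_at_k(grades, k):
    """DCG with exponential gain (2**grade - 1) and a log2 position discount."""
    return sum((2 ** g - 1) / math.log2(i + 2) for i, g in enumerate(grades[:k]))


def ndcg_at_k(grades, k):
    """grades: human relevance grades in the order the engine ranked the results."""
    ideal = dcg_at_k(sorted(grades, reverse=True), k)
    return dcg_at_k(grades, k) / ideal if ideal > 0 else 0.0


print(ndcg_at_k([4, 3, 2, 0], k=4))    # 1.0 (already ideally ordered)
print(round(ndcg_at_k([0, 4], k=2), 4))  # 0.6309 (best item ranked second)
```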

Mean Average Precision (MAP)

MAP is another ranking quality metric that takes into account both how many relevant results are returned and where they appear in the list. MAP values range from 0 to 1, where a higher value indicates better performance.
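The sketch below computes average precision (AP) for each query and averages it across queries to get MAP. It assumes binary relevance judgments and divides each query's summed precision by its total number of relevant items; some tools instead divide by the number of relevant items retrieved, so scores can differ slightly between implementations.

```python
def average_precision(ranked_ids, relevant_ids):
    """Sum of precision@i at every rank i holding a relevant item,
    divided by the total number of relevant items for the query."""
    if not relevant_ids:
        return 0.0
    hits, total = 0, 0.0
    for i, pid in enumerate(ranked_ids, start=1):
        if pid in relevant_ids:
            hits += 1
            total += hits / i
    return total / len(relevant_ids)


def mean_average_precision(runs):
    """runs: list of (ranked_ids, relevant_ids) pairs, one per query."""
    return sum(average_precision(r, rel) for r, rel in runs) / len(runs)


runs = [
    (["p1", "p2", "p3"], {"p1", "p3"}),  # AP = (1/1 + 2/3) / 2
    (["p2", "p1"], {"p1"}),              # AP = (1/2) / 1
]
print(round(mean_average_precision(runs), 4))  # 0.6667
```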

Query Latency

Query latency is the time taken to process a search query and return the relevant results. It depends on factors such as the complexity of the search term, the amount of data to be searched, and the efficiency of the catalog management system. The shorter the query latency, the better the performance.
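Because averages can hide slow outliers, latency is often tracked as percentiles. A minimal sketch using Python's standard `statistics.quantiles` (available since Python 3.8); the choice of p50, p95, and p99 is a common convention rather than a requirement, and the sample values are synthetic.

```python
import statistics


def latency_summary(latencies_ms):
    """Summarize query latencies (milliseconds) as p50, p95, and p99.

    quantiles(n=100) returns 99 cut points; index 49 is the 50th percentile.
    Needs at least two data points.
    """
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


samples = list(range(1, 102))  # synthetic latencies: 1 ms to 101 ms
print(latency_summary(samples))  # {'p50': 51.0, 'p95': 96.0, 'p99': 100.0}
```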

Zero-result Rate (ZRR)

ZRR measures the percentage of search queries that return no results, often signaling either a recall problem in the search algorithm or missing content in the product index.
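A minimal sketch of computing ZRR from a search log, assuming each log entry is a hypothetical `(query, result_count)` pair. Returning the zero-result queries ranked by frequency gives annotators a natural work queue, for example for new synonym or normalization entries.

```python
from collections import Counter


def zero_result_rate(search_log):
    """search_log: iterable of (query, result_count) pairs.

    Returns (ZRR as a percentage, zero-result queries ranked by frequency).
    """
    total = 0
    zero = Counter()
    for query, count in search_log:
        total += 1
        if count == 0:
            zero[query] += 1
    rate = 100 * sum(zero.values()) / total if total else 0.0
    return rate, [q for q, _ in zero.most_common()]


log = [
    ("linen dress", 24),
    ("tshirt", 0),
    ("tshirt", 0),
    ("bamboo lid lunch box", 0),
    ("sneakers", 130),
]
print(zero_result_rate(log))  # (60.0, ['tshirt', 'bamboo lid lunch box'])
```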

By incorporating HITL approaches, such as human-curated search results and continuous feedback cycles, retailers can reduce zero-result queries, refine eCommerce site search relevance, and thereby improve conversion rates.

Bounce Rates

Bounce rates tend to increase when users are shown irrelevant search results or zero-result pages, or when site navigation is confusing and content loads slowly. Conversely, bounce rates decrease when search algorithms return accurate, personalized results quickly and intuitively. By employing HITL strategies such as search result curation and addressing zero-result queries, retailers can reduce bounce rates by helping users find relevant products efficiently.

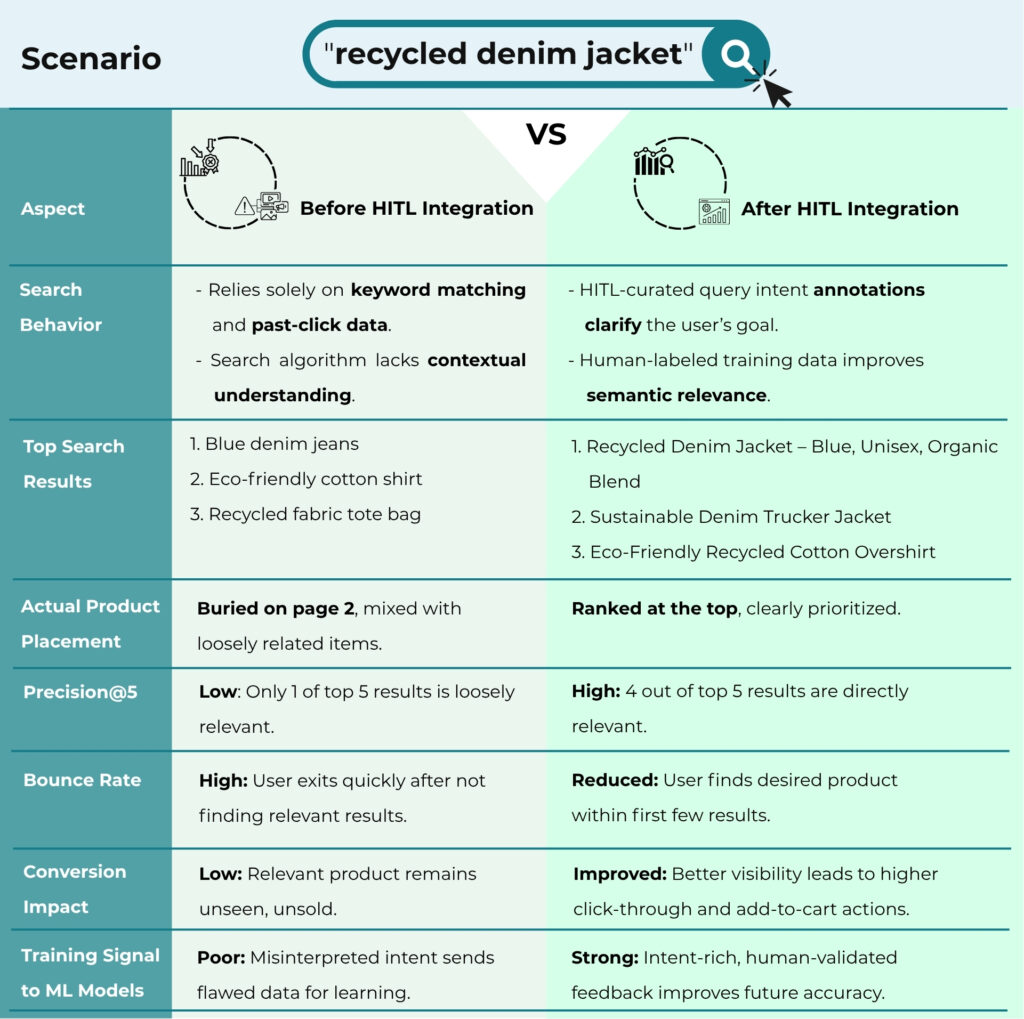

Before/After HITL

Let’s take a look at how the integration of a HITL approach can improve eCommerce search relevance, using a before/after scenario. For this example, we’ll use EcoFashion, a hypothetical mid-sized online retailer specializing in sustainable fashion.

Why This Matters

This example shows how HITL doesn’t just “correct” the model; it makes the system smarter, faster, and more in tune with how humans search. It’s particularly beneficial for:

- Complex queries (e.g., “organic linen summer dress with pockets”)

- Seasonal or trending products

- Small catalogs with niche inventory, and

- Ambiguous terms or mixed intent searches

The evolution of eCommerce site search is no longer just about faster algorithms. It’s about intelligent curation, nuanced understanding, and real-time adaptability, qualities best achieved when humans are part of the equation.

HITL is not a temporary fix; it’s a transformative approach that ensures relevance and context remain central to user experience. As human-in-the-loop machine learning continues to mature, ongoing research and development will be important to strengthen the relationship between human expertise and automated systems.

While HITL approaches can significantly improve eCommerce search relevance, they require careful implementation that combines domain expertise, scalable annotation processes, and advanced ranking diagnostics. That’s where NextWealth comes in.

Our HITL-driven evaluation frameworks are designed to handle large-scale query-product pair assessments, addressing challenges like long-tail and compositional queries, fuzzy or ambiguous search intents, and multilingual inputs. We apply graded relevance scoring, NDCG-based ranking evaluation, and failure mode diagnostics to identify precision gaps and support intelligent retraining pipelines. With capabilities that span active learning, ranking optimization, and continuous quality monitoring, we provide scalable, cost-effective solutions that help search systems adapt to real-world complexity.

If you’d like to stay competitive and redefine what a truly intuitive shopping experience looks like, get in touch with us today.
